Customer satisfaction survey — Everything you need to know

This article started with a question from a member of one of the business groups. It can be found in the main graphic.

Suppliers of products and services often repeat the mantra, “The customer must be satisfied”. They expect this kind of feedback from their customers. But is it really like that? Do customers have to be satisfied? Should we even ask about their satisfaction? If so, then:

- What should we ask about?

- When should we ask?

- Who should we ask?

What do you want to know? Customer satisfaction survey

User satisfaction is a bit like show business: if they don’t talk about us, it means that the show flopped. In general, all teams:

- marketing

- sales

- customer service

- production etc.

Everyone wants to hear about customer satisfaction.

From the UX perspective, I can say:

I prefer a customer who says, “It’s good, but if you tweak it, it’ll be even better.”

But what do customer satisfaction surveys give us?

By asking empathetic questions and lending a sympathetic ear to the user, we gain customer trust and loyalty. We all like to have an opinion. We like to be heard. And we don’t get that feeling by clicking on stars or emojis. Because what if it’s not good…

I’m not an enemy of this method, but… — What is NPS?

I have to admit that I often underestimate the NPS metric for measuring customer satisfaction. The Net Promoter Score (NPS) is based on one standard question: “How likely are you to recommend us to a friend or colleague?”, answered on a scale from 0 to 10.

As you can see, it doesn’t inquire about how satisfied you are with your interactions with the brand. It only examines the recommendation aspect in a very limited manner.
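For reference, the usual NPS calculation treats ratings of 9 or 10 as promoters and ratings of 0 to 6 as detractors, then subtracts the percentage of detractors from the percentage of promoters. Below is a minimal Python sketch of that arithmetic. The function name `net_promoter_score` and the sample ratings are made up for illustration, not taken from any survey tool.

```python
def net_promoter_score(ratings):
    """Return the NPS (from -100 to 100) for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)   # 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # 0 to 6
    # Ratings of 7 or 8 ("passives") only count towards the total.
    return 100 * (promoters - detractors) / len(ratings)


# Made-up sample: 4 promoters, 3 passives, 3 detractors.
sample = [10, 9, 9, 10, 8, 7, 8, 6, 3, 5]
print(net_promoter_score(sample))  # 10.0
```

Note that the three passive respondents change nothing except the denominator, which is one reason the score says so little about how people actually feel.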

Satisfied customers or trying to please us?

Focusing solely on NPS narrows our business perspective. Instead of accurately reflecting the client’s sentiment, the answer may simply reflect their effort to avoid upsetting us. This type of question often constrains respondents and nudges them towards the middle of the scale.

Remember! Users avoid selecting extreme answers!

The answer is not in the middle — How to calculate it

In surveys that use scales, it’s important to note that extremely positive and extremely negative responses occur less frequently, as they usually require intense emotions.

Users are 50% more likely to give an extreme rating after a bad experience rather than a good one. We talked about this phenomenon in an episode of our Design and Business podcast.

Such questions might reduce the effectiveness of the customer satisfaction survey

It may turn out that an average rating of 4.2 consists of mostly positive ratings dragged down by a small group of highly dissatisfied users. Or an average may hide a sharp split between excellent and poor ratings.
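To see how one average can describe very different situations, here is a small Python sketch. It assumes a 1 to 5 rating scale (the article does not say which scale the 4.2 refers to), and both samples are invented for the example.

```python
from collections import Counter
from statistics import mean


def rating_summary(ratings):
    """Return (average, counts per score) for ratings on an assumed 1-5 scale."""
    counts = Counter(ratings)
    distribution = {score: counts.get(score, 0) for score in range(1, 6)}
    return round(mean(ratings), 2), distribution


# Two invented samples of ten ratings, both averaging 4.2.
mostly_content = [4, 4, 4, 4, 4, 4, 4, 4, 5, 5]
polarised = [5, 5, 5, 5, 5, 5, 5, 5, 1, 1]

print(rating_summary(mostly_content))  # (4.2, {1: 0, 2: 0, 3: 0, 4: 8, 5: 2})
print(rating_summary(polarised))       # (4.2, {1: 2, 2: 0, 3: 0, 4: 0, 5: 8})
```

The averages are identical, but only the second sample contains two customers who had a very bad experience. Looking at the counts per score, not just the mean, is what reveals them.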

Customer satisfaction survey using CES

That’s why I prefer CES, which looks at how responses are grouped across the scale rather than at a single average. Customer Effort Score captures a broader perspective.

It asks: How easy was it to use the service or perform an action using our products?

Answers are scored on a scale of 1 to 7. The result is expressed on a scale from 0 to 100 and is calculated by grouping the scores: it is the percentage of respondents who chose one of the top answers. For example, if 65 customers out of 100 rated the ease of using our service as 5, 6 or 7 on the 7-point scale, the CES would be 65. Additionally, tracking CES over time allows us to observe how implemented changes or iterations affect customer satisfaction. We can also identify what raises and lowers the metric.
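The calculation described above can be sketched in a few lines of Python. The function name `customer_effort_score`, the `threshold` parameter and the sample data are assumptions made for this illustration. The cut-off of 5 follows the example in the text.

```python
def customer_effort_score(ratings, threshold=5):
    """Return the percentage of 1-7 ratings that are at or above `threshold`."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(not 1 <= r <= 7 for r in ratings):
        raise ValueError("ratings must be between 1 and 7")
    easy = sum(1 for r in ratings if r >= threshold)
    return 100 * easy / len(ratings)


# Invented sample of 100 answers: 65 of them are 5, 6 or 7.
answers = [5] * 30 + [6] * 20 + [7] * 15 + [1] * 5 + [2] * 5 + [3] * 10 + [4] * 15
print(customer_effort_score(answers))  # 65.0
```

Running the same function on the answers collected after each release gives a simple series to compare over time.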

Tip: You may learn more if you choose CES as your preferred customer satisfaction survey.

How to ask about customer satisfaction?

A good product is easy to use. When it comes to customer satisfaction surveys, the same principles should apply to templates. Questions about the quality of service and its reception should reach not only satisfied customers but also dissatisfied ones. So let’s be honest with the user and with ourselves.

Here are a few simple rules for when we want to create a customer satisfaction survey:

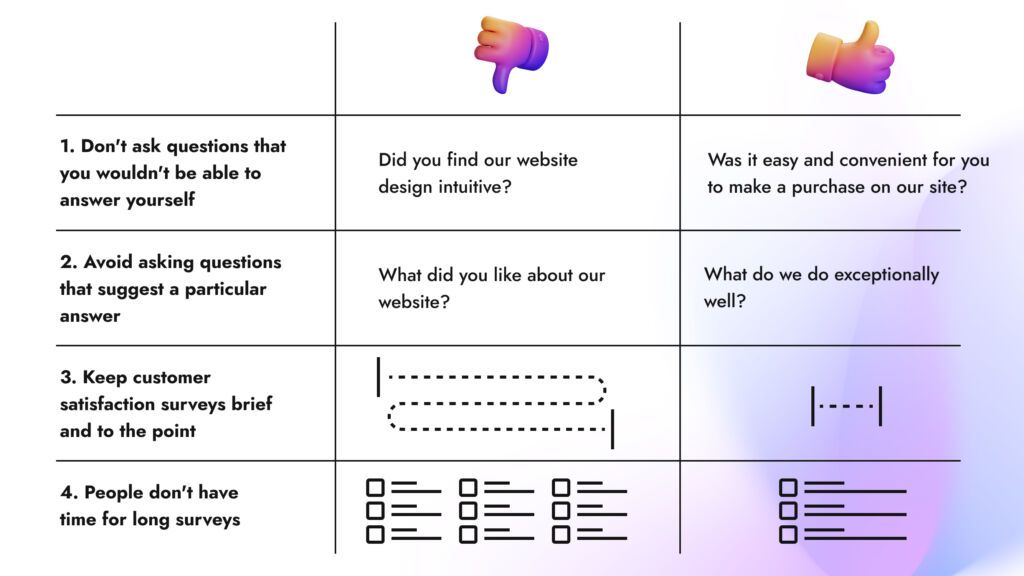

1. Don’t ask questions that you wouldn’t be able to answer yourself

It may seem strange, but there are surveys that we abandon because we do not understand the questions.

There is a rule: the simpler, the safer.

If we want to understand what the customer is happy with, we should ask them directly, in their own words.

Anti-examples of customer satisfaction questions:

Have I really “verified my online purchase”? Or should I just say whether I felt safe paying my bill online?

Also, avoid industry jargon: “Did you find our website design intuitive?”. After all, not every customer of yours is a UX designer!

Instead, ask: “Was it easy and convenient for you to make a purchase on our site?”.

2. Avoid asking questions that suggest a particular answer

As users, we are wary of tactics like the infamous joke from the Communist Bloc: “Who is your role model and why Stalin?”. Suggesting an answer to a question not only makes it difficult to get an honest opinion, but it also undermines the research. Don’t try to influence the result. Don’t focus on what you want to hear.

Anti-example of suggesting an answer:

The popular question: “What did you like about our website?” followed by a series of answers to choose from. I wouldn’t expect any of those options to be “Nothing”. And yet, we could come across such an opinion.

Rather, let’s ask: “What are we doing well?”. This will provide you with clear information about the product or feedback about your company and its strengths. It can also test if new features or elements generate the expected enthusiasm.

3. Keep your customer satisfaction surveys short and to the point

Overly long questions can be a nightmare in surveys.

Why?

- They don’t display well on mobile devices (which are already replacing computers).

- They cause respondent fatigue. If I don’t have the patience to read the question to the end, I won’t answer it. The more long questions you ask, the less likely it is that the customer will even remember your brand or rate it positively.

4. People don’t have time for long surveys

If you promise the user that the survey will take three minutes, make sure to keep that promise. This will build more trust.

For your survey, I recommend including three to four questions. This provides the best opportunity to collect a complete set of answers, reducing the likelihood of people dropping out midway and resulting in a more dependable outcome.

Very bad anti-example:

I must mention the famous customer satisfaction survey from one of Bethesda’s video games. The player who wanted to unsubscribe could explain their reasons to the company… in 48 questions. I don’t know anyone who would get past 12.

If you are planning longer surveys, carefully consider the purpose of the questions and how they will help assess customer satisfaction.

A well-crafted survey template is like a compelling short story that we want to read until the end out of curiosity.

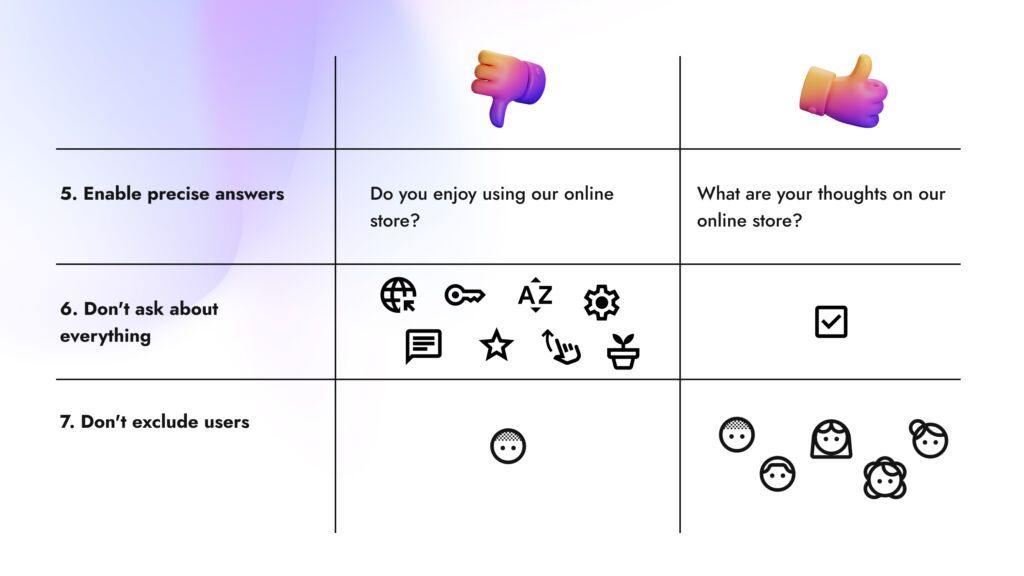

5. Enable precise answers

Questions with too few answer options will produce unreliable data that does not meet our standards. Don’t leave out options such as “I don’t know” or “I have no opinion”, and let the user add their own answer.

Anti-example:

The one I like the most is: “Do you enjoy using our online store?”. And two possible answers: Yes or No. If users are asked to rate your website or the quality of your services, allow them to express their thoughts fully.

Putting aside the fact that our main purpose for using online stores is to buy things, rather than just casually browsing…

6. Don’t ask about everything because you won’t learn anything

Focus on what’s most important to your business or what you don’t know. You are researching customer satisfaction, not writing an encyclopedia.

- Not sure about the purchasing process? Ask about the shopping cart.

- Implemented a new website design? Ask if the user can find all the information they need.

It is worth asking about those factors that we have not yet had a chance to understand well.

7. Do not exclude users

Unless your customer satisfaction survey targets only a carefully selected group of customers. In a comprehensive study, it’s best to avoid questions such as: “How would you rate your skin after 6 months of using our product?”. What if they’ve been using it for a month?

When to ask about customer satisfaction?

When seeking information on customer satisfaction, it’s important to take a comprehensive approach and consider when the user had the chance to thoroughly reflect on their experience with the product.

Anti-example:

We often come across surveys that appear after we have made a purchase but before the delivery arrives, at a time when we haven’t formed an opinion about the store yet because we haven’t received the goods. A survey that pops up on the homepage a few seconds after opening it does not allow me to evaluate the experience either.

So when is the best time to measure customer satisfaction?

For instance, in the case of e-commerce, it is worth asking the customer to fill out a survey after a successful purchase or after confirmation of product delivery, or shortly thereafter. When introducing a new feature, you can, like Miro does, ask for feedback immediately after its first use. Ask how it went and whether they like the new solution.

For loyal users, the survey can be “smuggled” into summaries. Spotify does this, for example, when it summarises the music from the whole year and asks about favourite moments with the app. This lets the user reflect on the entire experience.

The Zeigarnik effect — Why do we like to complete our tasks?

In short: Uncompleted tasks linger with us longer than those completed successfully.

This phenomenon was studied as early as the 1920s. It explains why a progress bar or a process broken down into steps effectively motivates the user to take action.

How to use this effect in research?

- Pose the first question directly in the email or in the survey window on the website, and invite the user to complete the rest by clicking a link.

- Imply that since they had time for the first question, answering the following ones will take just a moment.

Are you satisfied? Start asking questions!

As you can see, customer satisfaction research can be planned carefully, and you can borrow the best techniques practised in other industries. You can also learn from the mistakes of the competition. When designing a survey from a UX/UI perspective, it’s crucial to remember that another human being will be on the receiving end.

Sources:

https://www.webankieta.pl/blog/nps-wskazowki-prowadzic-badania-satysfakcji-klienta/

https://uk.surveymonkey.com/mp/bad-survey-questions-examples/